User experience makes all the difference in 3D robot vision. Here’s why.

User experience (UX) has become an integral part of everyday life. It is the bridge between us and the advanced technology we use daily, making that technology not only accessible but also enjoyable and efficient to use. In essence, UX exists to remove the friction between what we want or expect to happen and what actually happens.

The role of UX in 3D robot vision is just as important. As these advanced automation systems become more prevalent in industries ranging from manufacturing to logistics, the ease of use and intuitiveness of their interfaces can make a significant difference in their effectiveness and adoption rate. Excellent UX benefits various stakeholders. System integrators need less ramp-up time to become experts in a range of automation scenarios. End users save time and money by configuring new parts themselves, which is particularly valuable in high-mix, low-volume applications. Furthermore, everyone benefits from simpler onboarding and knowledge transfer, which helps retain knowledge and ensure continuity.

Nonetheless, 3D robot vision still has the undeserved reputation of being difficult to use and understand. The good news is that it doesn't have to be. In this post, we discuss what we consider great UX for 3D robot vision and show some best and worst practices.

From application knowledge to a working solution, with minimal friction and expert knowledge

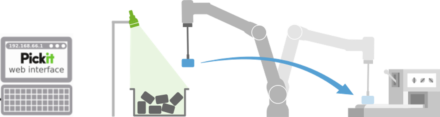

Imagine the following scenario. In your factory, an opportunity has been identified to automate the picking of randomly placed objects from a bin and placing them onto a conveyor belt to load a machine, like in this picture:

The application is crystal clear in your mind, and you can probably demonstrate and explain it to someone very quickly:

“In this bin, look for these parts, which should be picked like this, and feed the machine like so.”

This is called application knowledge.

Now suppose you want to automate this task with 3D robot vision. To execute it, you need to specify four main things to the 3D vision system:

1. Where to detect: In this bin,

2. What to detect: look for these parts,

3. How to pick: which should be picked like this,

4. Move the robot: and feed the machine like so.

However, there are a number of things that a person must learn in order to use 3D robot vision that have nothing to do with application knowledge, such as setting up the hardware and software and learning how to use them. This is called expert knowledge.

We believe that the ease of use of a technological solution, and the quality of its user experience, improve by minimizing:

the friction of specifying application knowledge;

the amount of expert knowledge required.

So much for the theory. How does that work in real life?

3D robot vision: Bad, good and best UX practices

Let’s look at the practical steps involved in setting up and using a 3D vision solution, and at the different ways each step can be supported with better or worse UX.

Step 1: Setting up and accessing the 3D vision system

Before you can transfer application knowledge to a 3D vision system and use it, you need to be able to access it. There are bad, better and even better ways to make this easy for end users:

Bad UX: The system is a black box, and you need to call an expert to set it up on-site or bring the system online for an expert to connect.

Better UX: The system has a user interface (UI) that allows you to set it up. You might need to download and install software for a specific operating system.

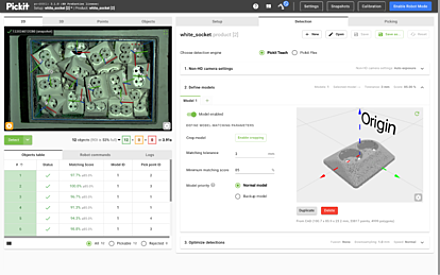

Even better UX: The UI runs directly in a web browser, so there is no need to install anything.

Step 2: Specifying application knowledge

Once you’ve set up and accessed the 3D vision system, you can start specifying application knowledge. So, let’s go back to the pick and place application you identified in your factory, and the four things you need to specify to make it work:

1. Where to detect

The first thing to specify is where objects need to be detected; in this case, inside a bin. Again, there are better and worse UX practices for 3D robot vision:

Bad UX: There is no way to specify where to detect. If two bins are visible to the camera, you cannot tell the system that you only want to pick from one of them.

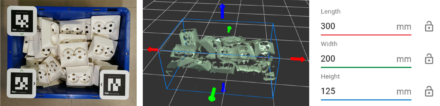

Better UX: You can specify where to detect, based on numeric inputs.

Even better UX: You can teach the system where to detect by showing it to the camera and can interactively fine-tune the specification from software.
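To illustrate what specifying where to detect with numeric inputs can look like, here is a minimal Python sketch. It assumes the region of interest is an axis-aligned box in the camera frame, given by hypothetical `min_corner` and `max_corner` coordinates in meters, and that the camera delivers a point cloud as a list of (x, y, z) tuples. `BoxROI` and `crop_to_roi` are illustrative names, not part of any product API. Points outside the box are discarded before detection, which is one way a system can ignore a second bin in view.

```python
from dataclasses import dataclass
from typing import List, Tuple

# (x, y, z) in meters, camera frame (assumed convention for this sketch)
Point = Tuple[float, float, float]


@dataclass(frozen=True)
class BoxROI:
    """Hypothetical axis-aligned region of interest, e.g. the inside of one bin."""
    min_corner: Point
    max_corner: Point

    def contains(self, p: Point) -> bool:
        # A point is inside if every coordinate lies within [min, max] on that axis.
        return all(lo <= v <= hi for lo, v, hi in zip(self.min_corner, p, self.max_corner))


def crop_to_roi(points: List[Point], roi: BoxROI) -> List[Point]:
    """Keep only the points inside the ROI, so detection ignores everything else."""
    return [p for p in points if roi.contains(p)]


# Two bins side by side; only the left one (x between 0.0 and 0.4 m) is the ROI.
left_bin = BoxROI(min_corner=(0.0, 0.0, 0.5), max_corner=(0.4, 0.3, 0.9))
cloud = [
    (0.10, 0.10, 0.80),  # part in the left bin
    (0.35, 0.25, 0.60),  # another part in the left bin
    (0.60, 0.10, 0.80),  # part in the right bin, outside the ROI
]
print(crop_to_roi(cloud, left_bin))  # → [(0.1, 0.1, 0.8), (0.35, 0.25, 0.6)]
```

Teaching the region by showing it to the camera could be seen as filling in these corners automatically instead of typing them; real systems may also support oriented boxes or other shapes.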

2. What to detect

The next step is specifying what to detect. Because production flexibility and agility are critical benefits that a 3D vision system must provide, its ability to learn new objects quickly makes all the difference.

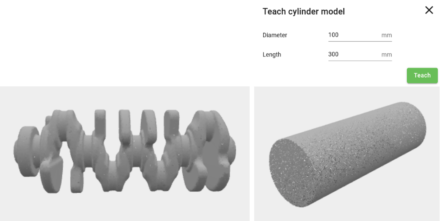

Bad UX: Data about the part to detect, such as images or a CAD model, must be sent off for a lengthy training process that can take hours or even days.

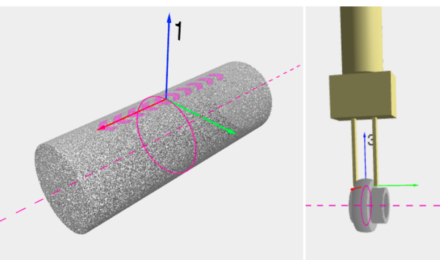

Good UX: You can interactively teach the part to detect by either showing it to the camera, uploading a CAD file, selecting a shape type (cylinder, box) and dimensions, or even applying AI recognition. The feedback loop is fast, and you can re-teach or modify the part model on the spot.

3. How to pick

Application knowledge about exactly how a part should be picked can likewise be transferred with more or less friction and effort:

Bad UX: Pick points are specified manually, one by one.

Better UX: Pick points are specified interactively, can include symmetries and can tolerate some variation in their location.

Even better UX: The system can automatically propose pick points for your part, which you can override or modify if needed.
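Pick points with symmetries can be sketched in a similar way. The minimal example below assumes a part with N-fold rotational symmetry about its own z-axis and a single taught pick point, given as a position in meters and a gripper yaw angle in radians in the part frame. `PickPoint` and `expand_symmetric` are hypothetical names for illustration only. Rotating the taught point by multiples of 360°/N yields equivalent pick points, so one teaching step gives the robot several options to choose from, for example the most reachable one.

```python
import math
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class PickPoint:
    """Hypothetical pick point in the part frame: position (m) and gripper yaw (rad)."""
    x: float
    y: float
    z: float
    yaw: float


def expand_symmetric(pick: PickPoint, n_fold: int) -> List[PickPoint]:
    """Return the n_fold pick points equivalent under rotation about the part's z-axis."""
    if n_fold < 1:
        raise ValueError("n_fold must be at least 1")
    points = []
    for k in range(n_fold):
        angle = 2 * math.pi * k / n_fold
        c, s = math.cos(angle), math.sin(angle)
        points.append(PickPoint(
            x=c * pick.x - s * pick.y,  # rotate the position in the XY plane
            y=s * pick.x + c * pick.y,
            z=pick.z,                   # height is unchanged by a rotation about z
            yaw=(pick.yaw + angle) % (2 * math.pi),  # the gripper turns with the part
        ))
    return points


# A part with 4-fold symmetry (e.g. a square flange), taught with a single pick point.
taught = PickPoint(x=0.05, y=0.0, z=0.02, yaw=0.0)
candidates = expand_symmetric(taught, 4)
print([round(math.degrees(p.yaw)) for p in candidates])  # → [0, 90, 180, 270]
```

Tolerating some variation in pick-point location, as mentioned above, would add a further layer on top of this, for example accepting a grasp within a small positional or angular range around each candidate.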

4. Move the robot

The last piece of application knowledge to transfer to the 3D robot vision system is how the robot should move, in this case to place the object on a conveyor belt. As you may have guessed, there are worse and better ways to enable this:

Bad UX: Robot programs must be rewritten manually every time a different application is deployed, and best practices are hard to carry over from one application to the next.

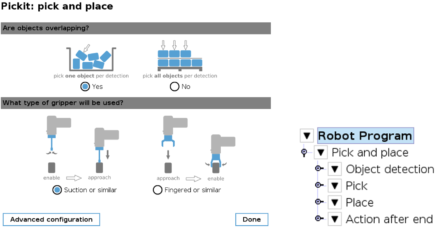

Better UX: Ready-to-use example programs are available for different types of automation scenarios.

Even better UX: Ready-to-use templates for common skills like pick and place in a high-level interface that hides complexity and promotes best practices for efficient and robust execution.

Step 3: Getting effective support

The best support is the support you never need. A comprehensive knowledge base and product training can take you a long way in this respect. But at some point you may have questions, or something might not work as expected. Even then, smart system features can significantly improve your support experience.

Bad UX: You go back and forth with the support team by email and phone.

Better UX: Save "snapshots" of your 3D vision results so that the support team can replicate your exact conditions and provide you with the most relevant feedback.

Lowering the barrier by optimizing user experience

Users are at the core of any technology, and 3D vision is no exception. Lowering the entry barrier is therefore essential, both to empower users and to increase the adoption of 3D robot vision across a variety of industries. Although 3D robot vision is a relatively new technology, users should not need extensive technical understanding to use it efficiently. At least, that’s how we at Pickit 3D conceive our solution.

We believe that the secret to unlocking the full potential of 3D robot vision technology lies in combining state-of-the-art functionality with an amazing user experience. Our approach to UX is based on years of experience and more than a thousand deployments, and we continue to develop new improvements.