3D Robot Vision Across Automation Scenarios

A dive into Bin Picking, Depalletization, and Robot Guidance

In the world of automation, 3D robot vision is emerging as a powerful ally, revolutionizing efficiency and productivity across industries. The beauty of this technology lies in its versatility: it can be tailored to a wide range of processes. However, to unlock its potential, it's crucial to understand the nuances of different automation scenarios and how they influence the implementation of 3D robot vision systems.

Why does this matter? Simply put, the role and application of 3D robot vision can vary greatly based on the specific automation scenario. What you need from the 3D vision system, and where it fits into your production line, will dictate the type of hardware, software and support you require. This isn't just about economics; it's about ensuring a smooth implementation and an optimal end-user experience.

In this post, we'll explore three common automation scenarios (bin picking, depalletization, and robot guidance) and their idiosyncrasies in terms of detection, picking, accuracy, and robot programming. Detection deals with finding the desired parts in the camera image. Picking is concerned with how to pick a part (where, with which tool, and how to avoid collisions). Accuracy refers to how precisely the application needs each part to be located. Robot programming relates to best practices for structuring a 3D-vision-enabled robot program.

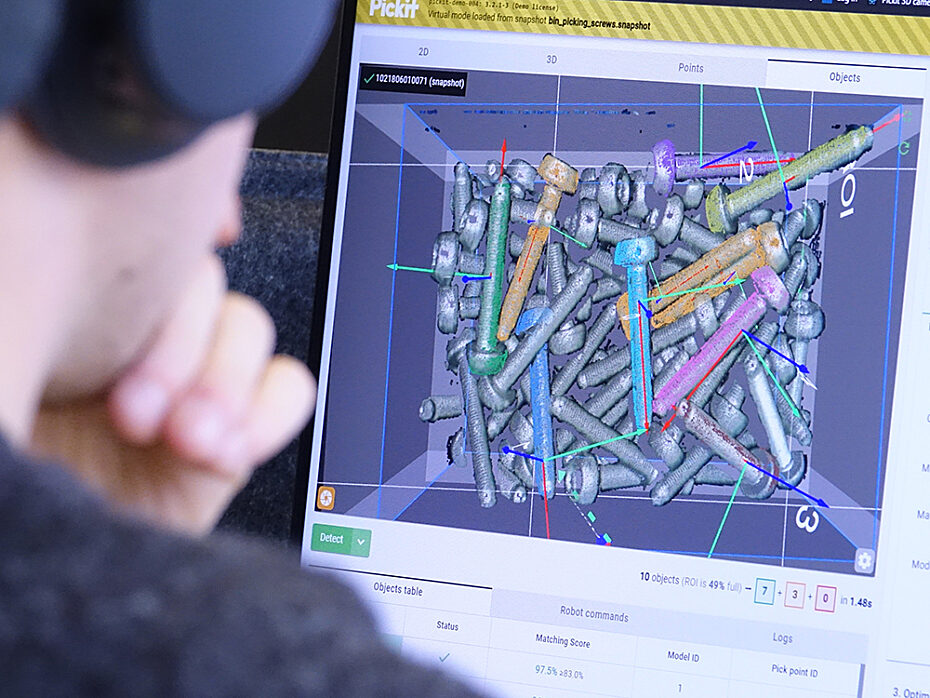

Bin Picking: repeated detection of randomly oriented parts

Bin picking typically occurs at the beginning of the production or automation line. Automating it with 3D robot vision very often replaces the low-value work of taking parts out of bins or other containers to feed a line. Even today, up to 38% of factory labor time is spent on this daunting task at the start of manufacturing processes. (1)

3D robot vision steps in here, essentially providing robots with the ability to 'see' objects, enabling them to efficiently pick randomly oriented parts from bins and place them onto a CNC machine, conveyor belt, or tray…

When it comes to detection, the bin picking process has its own characteristics affecting the 3D vision configuration:

It involves multiple parts that can be randomly oriented or positioned in a bin or tray.

Parts are detected by reasoning about their 3D shape.

The process involves repeatedly detecting parts, as picking one part may disturb the location of neighboring ones. So even if multiple neighboring parts are found in a single detection, multiple detections are often required to pick them.

Picking, unsurprisingly, is just as critical and just as specific to the bin picking process:

An adequate robot tool is required.

A part can usually be picked in several ways, and checks such as collision avoidance determine whether a given pick is feasible.

Strategies are defined for ordering picks when multiple parts are detected, such as picking the highest parts first.
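As an illustration of the last two points, here is a minimal sketch of a "highest part first" ordering that first discards infeasible picks. The `PickCandidate` fields and the `order_picks` function are hypothetical names chosen for this example; in a real system the reachability and collision flags would come from the vision software's own checks.

```python
from dataclasses import dataclass


@dataclass
class PickCandidate:
    part_id: str
    height_m: float        # height of the grasp point above the bin floor
    reachable: bool        # assumed output of a reachability check
    collision_free: bool   # assumed output of a tool/bin collision check


def order_picks(candidates):
    """Keep only feasible picks and sort them highest first."""
    feasible = [c for c in candidates if c.reachable and c.collision_free]
    return sorted(feasible, key=lambda c: c.height_m, reverse=True)


candidates = [
    PickCandidate("a", 0.12, True, True),
    PickCandidate("b", 0.20, True, False),   # highest, but the tool would collide
    PickCandidate("c", 0.18, True, True),
    PickCandidate("d", 0.05, False, True),   # out of reach
]
print([c.part_id for c in order_picks(candidates)])
```

Part "b" is the highest but is skipped because its pick is not collision-free, so the robot would go for "c" first and then "a".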

In terms of accuracy, a 3D robot vision system for bin picking may have different requirements depending on the application. Generally speaking, smaller parts need higher accuracy, larger parts need less. Certain part and tool combinations can also reduce the required accuracy.

Concerning robot programming, two important aspects are to be considered:

Bin extraction is typically the only unstructured part of the application (it’s different for every part), while part drop-off is similar from one cycle to the next. A 3D vision solution can help the robot find an extraction path that is feasible: both reachable and collision-free.

Cycle time can be optimized by masking detection time with robot motions.
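To make the idea of masking detection time more concrete, the sketch below runs the next detection in a background thread while the robot performs its drop-off motion. It assumes a fixed camera above the bin, so the view is clear as soon as the robot has left with a part. All function names and durations are placeholders for this example, with `time.sleep` standing in for real scanning and motion.

```python
from concurrent.futures import ThreadPoolExecutor
import time

DETECT_S = 0.01  # assumed detection time (placeholder value)
MOVE_S = 0.01    # assumed duration of one robot motion (placeholder value)


def detect_parts(cycle):
    """Hypothetical stand-in for a 3D scan plus part detection."""
    time.sleep(DETECT_S)
    return f"pose-{cycle}"


def pick(pose):
    time.sleep(MOVE_S)  # robot moves into the bin and grasps the part


def drop_off(pose):
    time.sleep(MOVE_S)  # robot moves to the drop-off location


def run_sequential(cycles):
    """Detect, pick and drop off strictly one after the other."""
    handled = []
    for c in range(cycles):
        pose = detect_parts(c)
        pick(pose)
        drop_off(pose)
        handled.append(pose)
    return handled


def run_masked(cycles):
    """Start the next detection while the robot is busy with the drop-off."""
    handled = []
    with ThreadPoolExecutor(max_workers=1) as pool:
        pending = pool.submit(detect_parts, 0)
        for c in range(cycles):
            pose = pending.result()        # wait for the detection if needed
            pick(pose)
            if c + 1 < cycles:
                # The robot has left the bin, so the next scan can start now.
                pending = pool.submit(detect_parts, c + 1)
            drop_off(pose)
            handled.append(pose)
    return handled


print(run_masked(3) == run_sequential(3))
```

Both versions handle the same parts in the same order. In the masked version only the first detection adds to the cycle time, as long as a detection takes no longer than the drop-off motion.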

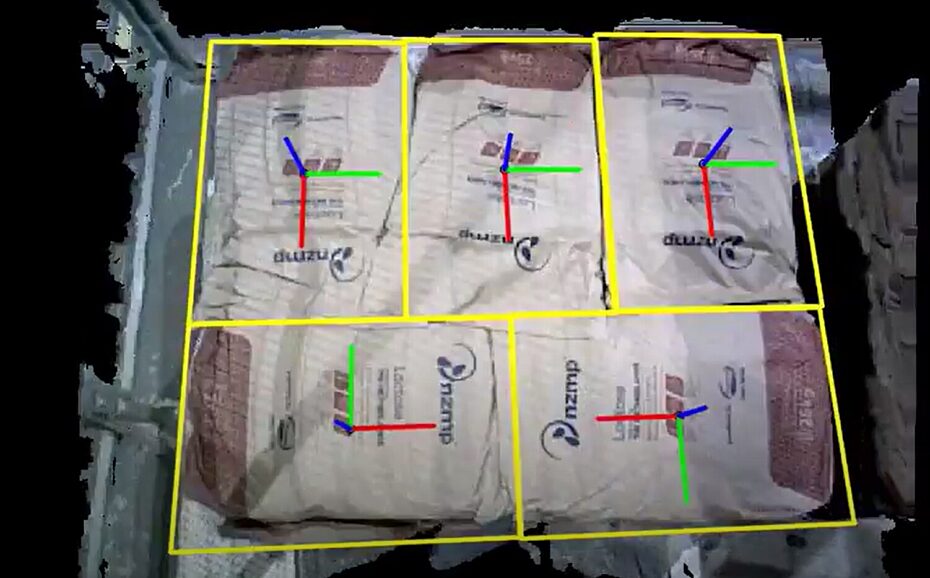

Depalletization: use of patterns and color information

Depalletization shares a kinship with bin picking but brings its own unique requirements to the table. Like bin picking, it usually occurs at the beginning of a line and aims to alleviate the strenuous task of moving boxes, buckets, bags or totes from a pallet.

And just like bin picking, the depalletization scenario is about picking and placing, where the 3D robot vision handles the detection and picking of the objects. However, in both detection and picking, there are some important differences from the bin picking process.

For detection, the 3D robot vision solution for depalletization has its own specificities:

It handles multiple semi-structured, somewhat larger objects in a layered pattern.

Picking one part will typically not disturb its neighbors, so you can detect once and pick multiple parts.

It uses color information in addition to the 3D shape, especially when dealing with mostly flat parts such as boxes.

The picking part of the depalletization scenario has more basic requirements compared to bin picking:

Picking is often done with four-axis depalletizing robots, which can only do vertical picks (without tilt).

When multiple parts are detected, there are ordering strategies for picking them, but they are different from those of bin picking. Pallet layers are typically emptied following a specific pattern or direction.

Depalletization doesn't require collision avoidance as bin picking does, because parts typically point up, don't overlap, and there is no container structure like the bin.
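A pattern-based ordering for one pallet layer could look like the sketch below, which empties the layer row by row, left to right within each row. The `Box` type, the `order_layer` function and the row tolerance are assumptions made for this example. Because picking a box does not disturb its neighbors, the whole order can be computed from a single detection.

```python
from typing import NamedTuple


class Box(NamedTuple):
    box_id: str
    x: float  # box center along the pallet, in meters
    y: float  # box center across the pallet, in meters


def order_layer(boxes, row_tolerance=0.05):
    """Group boxes into rows by y, then pick each row from low x to high x."""
    rows = []
    for box in sorted(boxes, key=lambda b: b.y):
        # A box joins the current row if its y is close to the row's first box.
        if rows and abs(box.y - rows[-1][0].y) <= row_tolerance:
            rows[-1].append(box)
        else:
            rows.append([box])
    return [b.box_id for row in rows for b in sorted(row, key=lambda b: b.x)]


# One detection of a 2 x 2 layer, with slightly imperfect box positions.
layer = [
    Box("D", 0.41, 0.31),
    Box("A", 0.00, 0.00),
    Box("C", 0.00, 0.30),
    Box("B", 0.40, 0.01),
]
print(order_layer(layer))
```

The tolerance absorbs small placement errors within a row; it would need to be chosen smaller than the spacing between rows.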

As parts to be picked and placed are usually larger in size, the depalletization process requires less accuracy than bin picking or robot guidance.

Depalletization robot programs need to take into account application-specific constraints, such as:

Sometimes there’s a separator between layers that must be removed, and the layer above it must be fully empty before removing it.

When using robot-mounted cameras, the detection point moves down as pallet layers are emptied.

Similar to bin picking, cycle time can be optimized by masking detection time with robot motions.
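The first two constraints can be sketched as a simple top-down loop over the pallet layers. Everything here is an assumption for illustration: the `depalletize` function, the way layers are described, and the geometry (uniform layer height, separator thickness ignored). The function only builds a list of actions; it does not talk to a robot.

```python
def depalletize(layers, layer_height, standoff, pallet_height):
    """Plan actions for a pallet, processing layers from top to bottom.

    layers: list ordered bottom to top; each entry is a dict with
            "boxes" (ids in pick order) and "separator_below" (bool).
    standoff: assumed camera distance above the top of the current layer.
    """
    actions = []
    for remaining in range(len(layers), 0, -1):
        layer = layers[remaining - 1]
        # The detection point moves down as layers are removed.
        z = pallet_height + remaining * layer_height + standoff
        actions.append(("detect_at", round(z, 3)))
        for box in layer["boxes"]:
            actions.append(("pick", box))
        # The separator is removed only after its layer is fully empty.
        if layer["separator_below"]:
            actions.append(("remove_separator", remaining - 1))
    return actions


pallet = [
    {"boxes": ["A", "B"], "separator_below": False},  # bottom layer
    {"boxes": ["C", "D"], "separator_below": True},   # top layer
]
for action in depalletize(pallet, layer_height=0.3, standoff=1.0, pallet_height=0.15):
    print(action)
```

With these example values, the camera detects at a height of 1.75 m for the top layer and 1.45 m for the bottom layer, and the separator is removed between the two.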

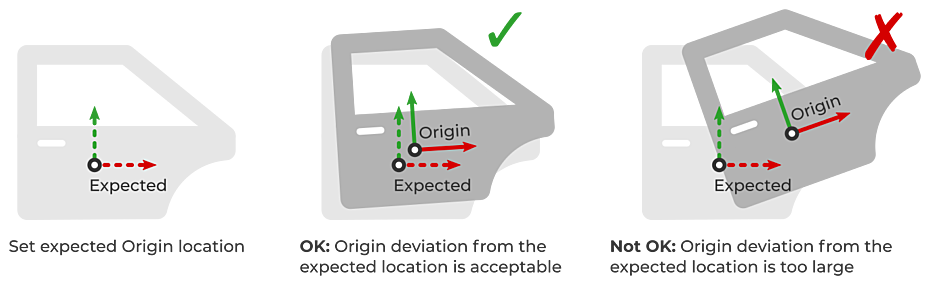

Robot Guidance: expected location and user frames

The third 3D vision scenario, robot guidance, is something entirely different as it is no longer a pick and place application. Without 3D robot vision, robot guidance for part processing is still labor-intensive for industries such as automotive and consumer electronics. Very often, the 3D vision automation case is triggered by the need to improve product quality and reduce human intervention or error.

Robot guidance with 3D vision usually takes place in the middle of the line, on a conveyor or pallet without a fixture. This application scenario could involve a robot sanding, deburring, welding, sealing or gluing, guided by 3D robot vision.

So what does a 3D vision system do exactly in this scenario? Again, it gives the robot eyes, focusing on detecting the object, even when its location changes slightly.

This application scenario is different from bin picking and depalletization in that there is no picking, and that detection involves a single and usually large part on a conveyor or pallet.

The expected location of the part is usually roughly known, and the 3D detection pinpoints its actual location so that the relevant operation can be performed accurately. Sometimes, if a part is found to deviate by more than a certain amount from its expected location, the operation should not be performed. For example, if the part is too far away, some motions might be beyond the robot's reach.
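Such a deviation check might look like the following minimal sketch. It simplifies the part pose to a planar (x, y, yaw) tuple, and the function names and threshold values are assumptions chosen for this example rather than recommended settings.

```python
import math


def deviation(expected, detected):
    """Return (offset in meters, absolute yaw difference in degrees)."""
    ex, ey, eyaw = expected
    dx, dy, dyaw = detected
    offset = math.hypot(dx - ex, dy - ey)
    # Wrap the yaw difference into [-180, 180) before taking its magnitude.
    angle = abs((dyaw - eyaw + 180.0) % 360.0 - 180.0)
    return offset, angle


def should_process(expected, detected, max_offset_m=0.05, max_angle_deg=10.0):
    """Only allow the operation if the part is close to where it is expected."""
    offset, angle = deviation(expected, detected)
    return offset <= max_offset_m and angle <= max_angle_deg


expected_pose = (1.00, 0.50, 0.0)
print(should_process(expected_pose, (1.02, 0.49, 3.0)))   # small deviation
print(should_process(expected_pose, (1.20, 0.50, 0.0)))   # 20 cm off
```

The first part is about 2 cm and 3 degrees off, so it is processed; the second is 20 cm away and is rejected.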

As robot movements need to be exact, guidance for part processing typically requires high accuracy.

Robot programs for these applications rely on the so-called user frame, a user-defined coordinate system fixed to a part. The robot programmer teaches the trajectory of an operation relative to this frame. When a new part arrives at a different location and is detected by the 3D vision system, the user frame is updated to the detected part pose, and the taught trajectory is shifted and rotated along with it.
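The principle can be shown with a small planar sketch: the trajectory is taught once in the user frame, and each detected part pose yields the corresponding path in robot base coordinates. A real system works with full 3D poses, and robot controllers typically handle this internally once the user frame is set, so the `to_base` function and the (x, y, yaw) frame representation are simplifying assumptions for this example.

```python
import math


def to_base(frame, point):
    """Map a point taught in the user frame into robot base coordinates.

    frame: (x, y, yaw_deg) of the user frame, expressed in the base frame.
    point: (x, y) taught relative to the user frame.
    """
    fx, fy, yaw_deg = frame
    c = math.cos(math.radians(yaw_deg))
    s = math.sin(math.radians(yaw_deg))
    px, py = point
    return (fx + c * px - s * py, fy + s * px + c * py)


# Trajectory taught once, relative to the part (for example a glue path).
taught = [(0.0, 0.0), (0.1, 0.0), (0.1, 0.05)]

# Part pose reported by the vision system for a newly arrived part.
detected_frame = (1.0, 2.0, 90.0)

for p in taught:
    x, y = to_base(detected_frame, p)
    print(f"{x:.3f}, {y:.3f}")
```

Because the part here is rotated by 90 degrees, a taught move along the part's x axis becomes a move along the base frame's y axis. The taught points themselves never change; only the frame does.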

Unlocking the potential of 3D robot vision

3D robot vision is nothing less than a transformative technology in automation. And yet, how it is set up and used is heavily influenced by the specific automation scenario at hand. Bin picking, depalletization, and robot guidance each present unique requirements and challenges.

Understanding these nuances is key to unlocking the full potential of 3D robot vision: ensuring a smooth implementation, giving end users the best experience, and achieving a fast return on investment.

For more information on 3D robot vision and automation scenarios, visit our website or our extensive knowledge base.

(1) The Robot Report